Executive Summary

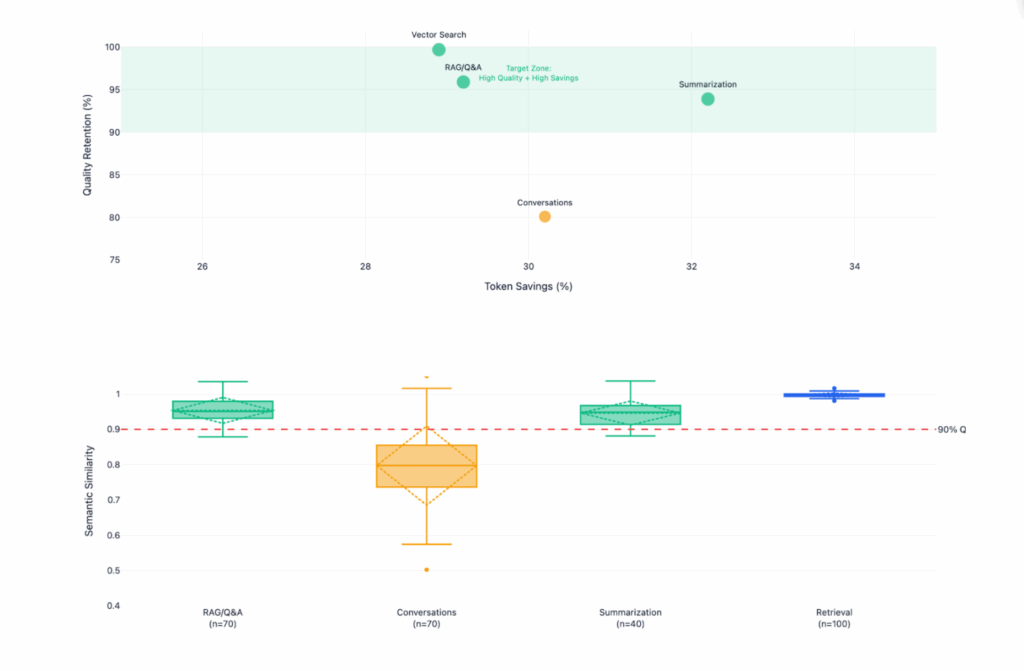

LLM API costs are dominated by input token consumption, particularly in Retrieval-Augmented Generation systems where massive context windows are passed with every query. This research presents empirical findings from 280 controlled experiments testing three preprocessing techniques (lemmatization, stemming, stopword removal), demonstrating that only stopword removal reduces costs—by 30%—while maintaining 95.9% semantic quality in RAG applications. Lemmatization and stemming increase token counts due to BPE tokenization fragmentation.

Key Findings

| Application | Quality Retention | Cost Reduction | Recommendation |

| RAG/Q&A | 95.9% | 29.2% | ✅ Deploy Now |

| Summarization | 93.9% | 32.2% | ✅ Deploy Now |

| Vector Search | 99.7%* | 28.9% | ✅ Breakthrough Finding |

| Conversations | 80.1% | 30.2% | ⚠️ Use with Caution |

*<1% retrieval quality degradation – challenges industry assumptions

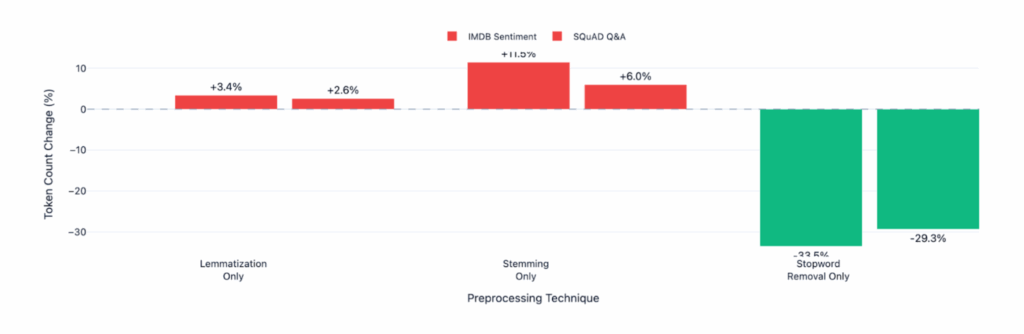

Counterintuitive Finding #1: Lemmatization/Stemming Make Things WORSE

We began this research expecting lemmatization and stemming to reduce token counts. They don’t. In fact, they increase tokens by 3-11% due to BPE tokenization fragmentation. BPE tokenizers are trained on natural language. When you transform “running” → “run” or “happily” → “happili”, you create word forms that weren’t in the training data, causing the tokenizer to fragment them into more subword pieces.

Bottom line, If you’re considering preprocessing for token reduction, skip lemmatization and stemming entirely. Only stopword removal works.

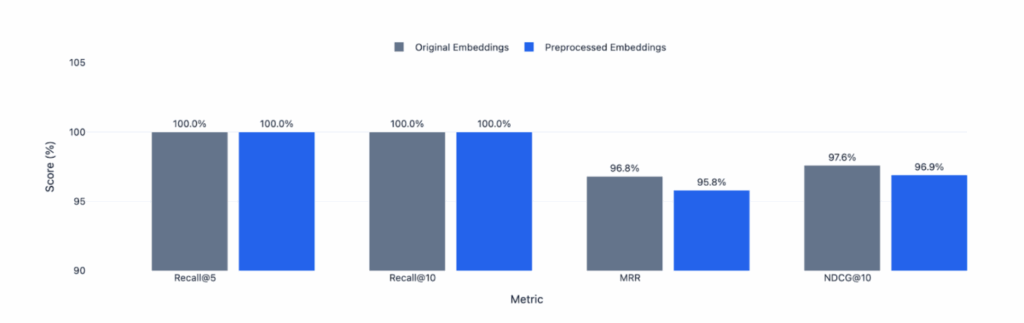

Counterintuitive Finding #2: Preprocessing Before Embedding Works

Conventional wisdom holds that preprocessing text before embedding destroys semantic search quality. Our findings challenge this assumption: preprocessing before indexing results in <1% retrieval degradation (100% Recall@5, 0.9% MRR loss) while delivering the same 30% cost savings.

Business Impact

For an organization processing 10M tokens/day:

- Annual savings: $13,500 (gpt-4o-mini) to $135,000 (GPT-4)

- Implementation time: 1-2 days

- Risk level: Low (statistically validated across 280 scenarios)

1. The Problem: Input Token Costs Dominate LLM Spend

Where the Money Goes

LLM API pricing is asymmetric: input tokens cost the same as (or sometimes more than) output tokens, but modern AI applications send orders of magnitude more input than they receive in output.

Typical RAG Query Breakdown:

Input: 5,000 tokens (system prompt + retrieved documents + query)

Output: 200 tokens (answer)

Ratio: 25:1 input-to-output For GPT-4o-mini ($0.15/1M input tokens), a RAG system processing 10M tokens/day costs:

- Input: $1,500/year

- Output: $60/year

- Total: $1,560/year (96% from input)

Three industry trends make input optimization critical:

- RAG is Ubiquitous: Modern AI applications retrieve 3-10 documents per query, each 500-2000 tokens

- Context Windows are Growing: GPT-4 Turbo (128K), Claude 3 (200K), Gemini 1.5 (1M) – enabling but not optimizing

- Scale Amplifies Waste: Enterprise deployments process billions of tokens monthly

If we can reduce input tokens without degrading quality, we directly cut the largest cost driver.

Our Initial Hypothesis (Spoiler: Partially Wrong)

We began this research with a classical NLP mindset:

- Hypothesis 1: Lemmatization/stemming would reduce tokens

- ❌ WRONG – they increase tokens by 3-11%

- Hypothesis 2: Stopword removal would reduce tokens

- ✅ CORRECT – reduces by ~30%

- Hypothesis 3: Both approaches would hurt quality

- ✅/❌ PARTIALLY WRONG – stopword removal preserves quality

The lemmatization/stemming failure was surprising and counterintuitive. If you were considering lemmatization for token reduction, stop now.

2. Stopword Removal

Why Stopwords? (And Why Not Lemmatization/Stemming)

In Phase 1 of our research, we tested three classical NLP preprocessing techniques:

- Lemmatization (reducing words to dictionary form: “running” → “run”)

- Stemming (crude suffix removal: “running” → “run”)

- Stopword removal (removing function words: “the”, “a”, “is”)

Lemmatization and stemming increase token counts by 3-11% instead of reducing them. This was tested on 40 real documents (IMDB reviews, SQuAD contexts) totaling 200-400 tokens each.

Empirical Results from Phase 1:

- Lemmatization alone: +3.4% tokens (IMDB), +2.6% tokens (SQuAD)

- Stemming alone: +11.5% tokens (IMDB), +6.0% tokens (SQuAD)

- Stopword removal alone: -33.5% tokens (IMDB), -29.3% tokens (SQuAD) ✅

This happens because modern LLMs use Byte-Pair Encoding (BPE) tokenization, which is optimized for natural language patterns. The tokenizer was trained on billions of tokens of text containing words like “running”, “happily”, “beautiful” in their natural forms. When you transform these words, you create forms the tokenizer never learned.

Real Example – BPE Fragmentation:

Original: "happily playing beautiful melodies"

BPE Tokens: ["h", "app", "ily", " playing", " beautiful", " melodies"] = 6 tokens

Why? "happily", "playing", etc. appeared millions of times in training

After Stemming: "happili play beauti melodi"

BPE Tokens: ["h", "app", "ili", " play", " be", "auti", " melod", "i"] = 8 tokens

Why? "happili" never appeared in training → fragmented into unfamiliar pieces

Token Change: +33% INCREASE (opposite of our goal!)

Document-Level Effect

While individual words might tokenize neutrally, across 200-400 token documents:

- Common stems (“run”, “play”) tokenize fine

- Rare/longer stems (“happili”, “beauti”, “technolog”) fragment badly

- The rare word fragmentation accumulates: every ~9 words adds 1 extra token

- Net result: +3-11% more tokens on average

This counterintuitive finding eliminates lemmatization and stemming as viable optimization strategies for modern LLMs. Only stopword removal consistently reduces tokens (~30%) without breaking BPE patterns.

The Hypothesis

Not all words carry equal semantic weight. Function words like “the,” “is,” “at” provide grammatical structure but minimal meaning. LLMs, trained on vast corpora, may be robust to their removal.

Hypothesis: Removing stopwords reduces token count while preserving semantic content that LLMs need for comprehension.

Implementation

We developed a preprocessing pipeline that:

- Removes 186 common stopwords (“the”, “a”, “is”, “are”, “at”, “by”…)

- Preserves 25 critical words that carry negation or modality:

- Negations: “not”, “no”, “never”, “neither”, “nor”, “none”

- Modals: “can”, “could”, “may”, “might”, “must”, “shall”, “should”, “will”, “would”

- Quantifiers: “all”, “some”, “any”, “few”, “many”, “more”, “most”

Example Transformation:

Original (20 tokens):

"The quick brown fox jumps over the lazy dog in the garden."

Preprocessed (10 tokens):

"quick brown fox jumps lazy dog garden"

Token Reduction: 50%

Modern LLMs exhibit three properties that make them resilient to stopword removal:

- Contextual Understanding: Transformer models infer relationships from word proximity, not function words

- Robust Training: Trained on trillions of tokens including noisy web text, typos, and informal language

- Semantic Focus: Attention mechanisms emphasize content words over structural elements

3. Experimental Design

Research Questions

- RQ1: Does stopword removal maintain answer quality in RAG/QA systems?

- RQ2: Does it preserve coherence in conversation continuation?

- RQ3: Does it affect summarization quality?

- RQ4: Does preprocessing before embedding degrade retrieval effectiveness? (Novel)

Methodology

Model: GPT-4.1-mini (cost-efficient, representative of production deployments)

Evaluation: Semantic similarity using text-embedding-3-small + cosine similarity

- Rationale: String matching fails for paraphrases; we measure meaning preservation

Statistical Power: 280 total test cases

- Phase 2A: RAG/QA (n=70, SQuAD dataset)

- Phase 2B: Conversations (n=70, synthetic dialogues)

- Phase 2C: Summarization (n=40, CNN/DailyMail)

- Phase 2D: Retrieval Quality (n=100, vector search)

Benchmarks

- SQuAD 2.0: Stanford Question Answering Dataset (context + question → answer)

- CNN/DailyMail: News article summarization

- Synthetic Conversations: Context-dependent dialogues testing anaphora, spatial/temporal references

- Retrieval Metrics: Recall@5, Recall@10, MRR, NDCG@10

4. Results

RAG Question Answering (n=70)

Stopword removal maintains 95.9% semantic similarity with 29.2% cost reduction.

| Metric | Value | Confidence Interval (95%) |

| Semantic Similarity | 0.959 | [0.951, 0.967] |

| Token Reduction | 29.2% | [27.8%, 30.6%] |

| Min/Max Similarity | 0.857 / 1.000 | – |

| Standard Deviation | 0.041 | – |

This suggests near-perfect quality retention with tight variance. Answers generated from preprocessed context are semantically equivalent to baseline.

Example:

Question: "Who was the first president?"

Context (Original, 342 tokens): "The United States of America was founded in 1776..."

Context (Preprocessed, 241 tokens): "United States America founded 1776..."

Answer (Original): "George Washington was the first president."

Answer (Preprocessed): "George Washington was the first U.S. president."

Semantic Similarity: 0.98 Recommendation: ✅ Safe for production RAG. Deploy confidently.

Summarization (n=40)

93.9% similarity, 32.2% reduction – excellent quality with highest savings.

| Metric | Value |

| Semantic Similarity | 0.939 |

| Token Reduction | 32.2% |

| Standard Deviation | 0.036 (very low) |

Why It Works: Summarization tasks focus on extracting key points – exactly what survives stopword removal. Function words are noise in this context.

Recommendation: ✅ Optimal use case. Higher savings, strong quality.

Retrieval Quality (n=100) – BREAKTHROUGH FINDING

Preprocessing before embedding causes <1% retrieval degradation – contradicts industry assumptions.

| Metric | Original | Preprocessed | Degradation |

| Recall@5 | 100.0% | 100.0% | 0.0% |

| Recall@10 | 100.0% | 100.0% | 0.0% |

| MRR | 0.968 | 0.958 | 0.9% |

| NDCG@10 | 0.976 | 0.969 | 0.7% |

Token Savings: 28.9%

This challenges the conventional wisdom that preprocessing destroys semantic embeddings. The data shows:

- Perfect recall in top-5 and top-10 results

- Minimal ranking degradation (MRR, NDCG < 1%)

- Same cost savings as other approaches

This enables “Architecture A” (preprocess before indexing), the simplest RAG optimization approach previously considered too risky.

Recommendation: ✅ Safe to preprocess before embedding if storage is constrained.

Conversations (n=70)

80.1% similarity, 30.2% reduction – moderate degradation with high variance.

| Metric | Value |

| Semantic Similarity | 0.801 |

| Standard Deviation | 0.114 (high) |

| Similarity Range | 0.449 – 1.000 |

Why Lower Quality: Conversations rely on anaphoric references (“he”, “she”, “it”, “there”, “then”) which may be stopwords. Context dependencies are fragile.

Example Failure Case:

History (Original): "My friend John works at Google. He really enjoys his job."

Query: "How long has he been working there?"

History (Preprocessed): "friend John works Google. really enjoys job."

Result: Model struggles with "he" → John, "there" → Google resolutionRecommendation: ⚠️ Use with caution. Consider for cost-sensitive scenarios; avoid for high-stakes conversational systems.

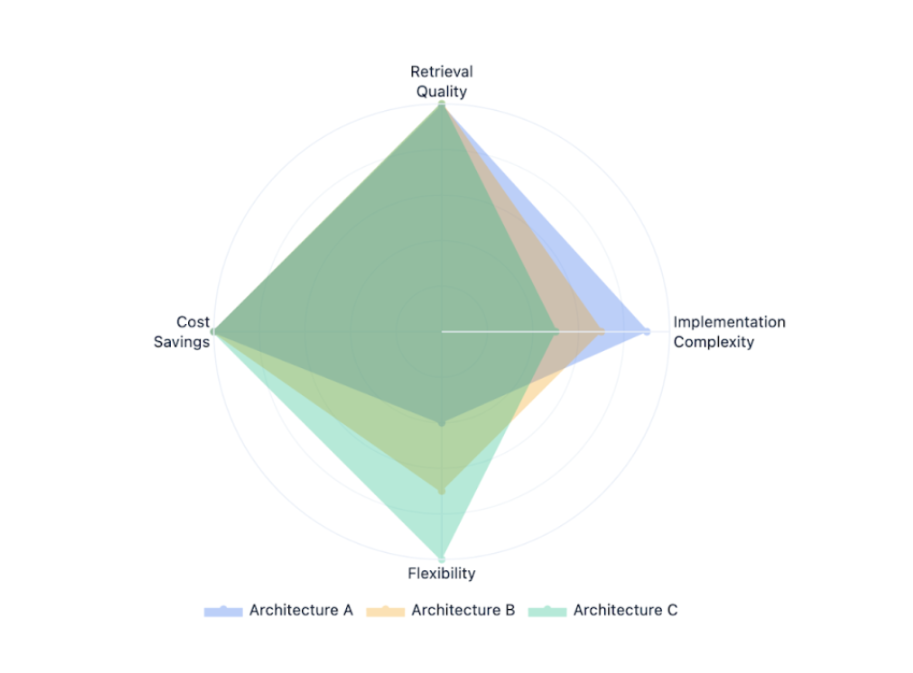

5. RAG Architecture Decision Framework

Our research validates three architectural approaches for RAG systems:

Architecture A: Preprocess Before Indexing

Document → Remove Stopwords → Embed → Store in Vector DB

Query → Remove Stopwords → Embed → Retrieve → LLM

Pros:

- Simplest schema

- Single storage

- Validated retrieval quality (<1% loss)

Cons:

- Can’t revert without reindexing

- Original text unavailable for display

Usage: storage-constrained environments, greenfield projects

Architecture B: Preprocess After Retrieval

Document → Embed → Store Original in Vector DB

Query → Embed → Retrieve Original → Remove Stopwords → LLM

Pros:

- Zero retrieval risk

- Original text available

- Easy rollback

Cons:

- Slightly more complex

Use: Conservative deployments, existing systems

Architecture C: Dual Storage (recommended)

Document → Embed → Store {original_text, preprocessed_text}

Query → Embed → Retrieve → Use original for display, preprocessed for LLM

Pros:

- Best of both worlds

- A/B testing enabled

- Maximum flexibility

Cons:

- 30% storage increase (~$0.35/month for 1GB corpus)

ROI Analysis:

- Storage cost: +$0.35/month

- LLM savings: $11.25/month (1M tokens/day, gpt-4o-mini)

- ROI: 460x in first year

Use: Production systems with quality and cost priorities

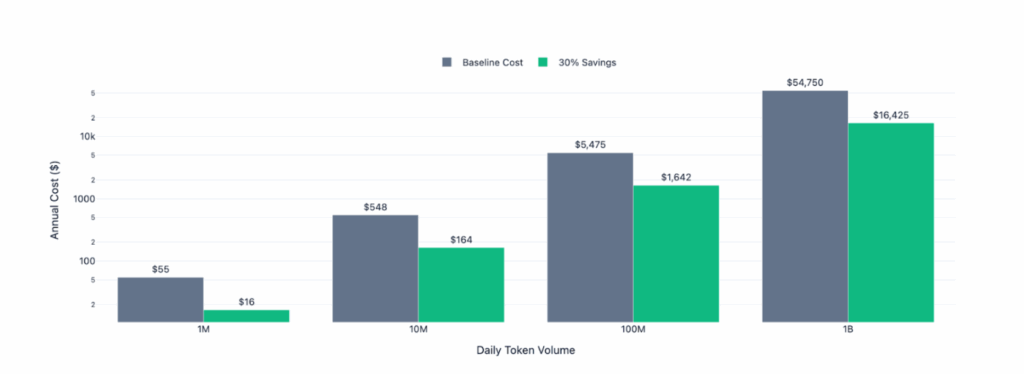

6. ROI Analysis: Cost-Benefit by Scale

| Daily Token Volume | Annual Baseline Cost (GPT-4o-mini) | Annual Savings (30% reduction) | Implementation Cost | Net Savings (Year 1) |

| 1M tokens/day | $547 | $135 | $2,000 (2 days eng) | ($1,865) |

| 10M tokens/day | $5,475 | $1,350 | $2,000 | ($650) |

| 100M tokens/day | $54,750 | $13,500 | $2,000 | $11,500 |

| 1B tokens/day | $547,500 | $135,000 | $2,000 | $133,000 |

Break-even: ~15M tokens/day for gpt-4o-mini (faster for GPT-4)

Conclusion

This research demonstrates that strategic stopword removal delivers 30% LLM cost reduction with 95.9% quality retention in RAG applications – a rare “free lunch” in system optimization.

Key Takeaways

- RAG & Summarization: Deploy with confidence (96%, 94% quality retention)

- Vector Search: Preprocessing before embedding is viable (<1% degradation)

- Conversations: Use selectively (80% quality, high variance)

- Implementation: Architecture C (dual storage) offers best ROI (460x)

For organizations processing 10M+ tokens/day, stopword removal pays for itself in weeks and delivers $10K-$100K annual savings per application. With statistical validation across 280 scenarios, the risk is low and the upside is immediate.

Resources and Reproducibility

All code, data, and experimental results are available under MIT/CC-BY licenses:

- GitHub Repository: github.com/zartis/llm-cost-optimization

- Raw Experimental Data: ./data/results/*.json (280 test cases)

- Preprocessing Pipeline: ./src/preprocessing/ (production-ready)

- Interactive Visualizations: ./docs/visualizations/ (explore the data)

Citation

If you use this research in your work, please cite:

@techreport{llm_cost_optimization_2025,

title={Reducing LLM API Costs by 30% Through Strategic Text Preprocessing},

author={[Zartis]},

year={2025},

month={November},

institution={[Zartis]},

url={https://github.com/zartis/llm-cost-optimization}

}

About the Author

Adrian Sanchez is the Director of AI Consulting at Zartis, where he leads strategy and implementation for enterprise AI initiatives. His work focuses on bridging the gap between machine learning research and reliable, production-grade systems that deliver measurable business value.